Research

My research lies at the intersection of data privacy, software security, machine learning, and algorithmic fairness. The overarching goal of my work is to prevent sensitive personal information from leaking in unintended ways. My current research focuses on differential privacy and its interactions with software security, formal verification, numerical optimization, statistical inference, and machine learning.

Some of my current projects include:

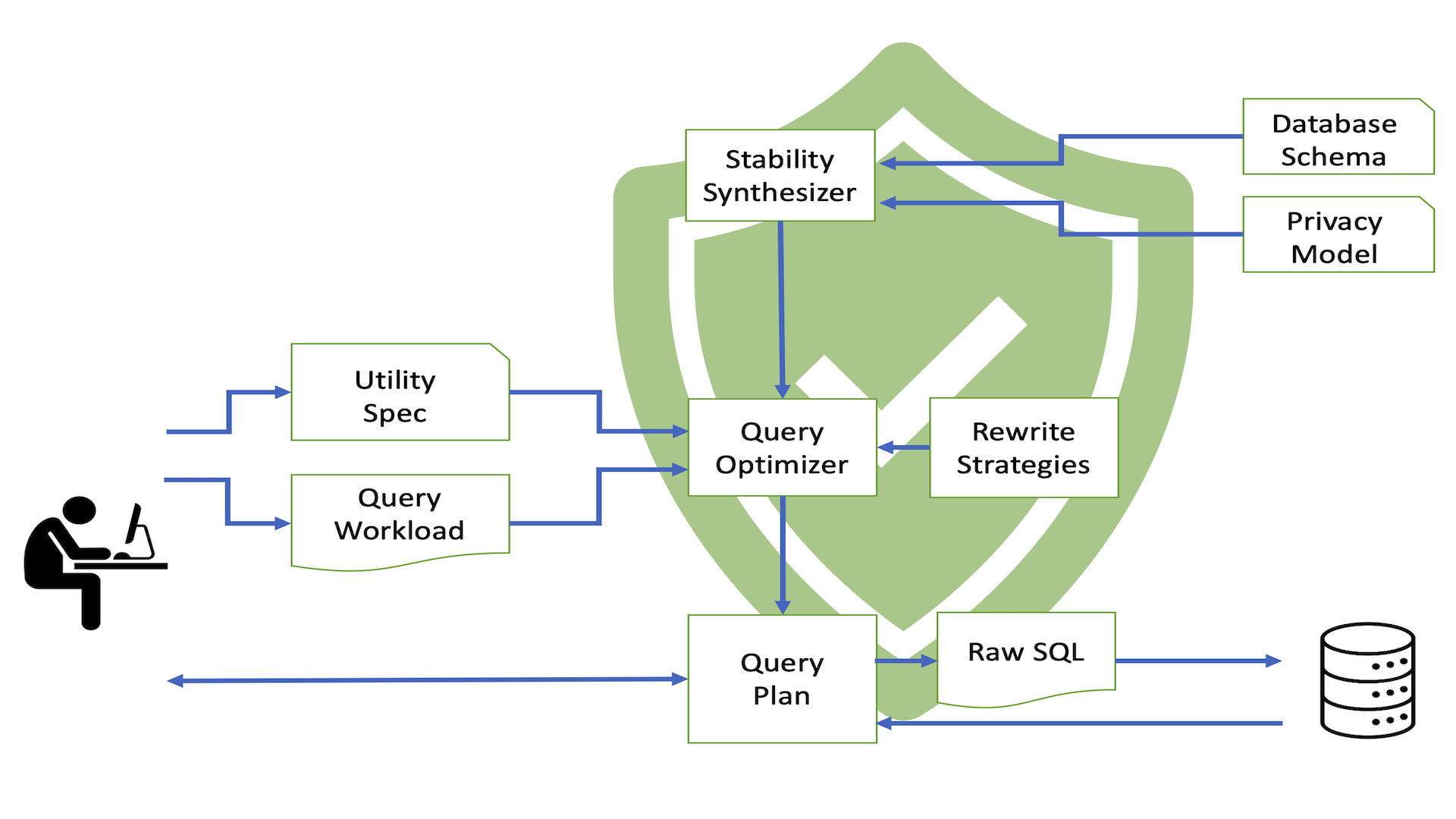

Extensible Differentially Private SQL System

Key features:

- Allows a user to write code that explains how a set of query answers is intended to be used.

- Allows a database administrator to customize the plausible deniability guarantees that differential privacy is required to provide.

- Uses formal methods and programming language techniques to synthesize and verify key components, such as the sensitivity and stability of data transformation operators.
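To illustrate why the sensitivity and stability of operators matter, here is a minimal sketch, not the system's actual implementation. It assumes the standard composition rule that a query with sensitivity s applied after a c-stable transformation has sensitivity c·s, and it calibrates Laplace noise to that value. The operator table and all function names are hypothetical.

```python
import random

# Hypothetical stability table: a c-stable operator maps tables that differ
# in one row to outputs that differ in at most c rows.
OPERATOR_STABILITY = {
    "filter": 1,        # dropping rows never amplifies a one-row change
    "duplicate_x2": 2,  # emitting every row twice doubles a one-row change
}

def composed_sensitivity(operators, query_sensitivity):
    """Sensitivity of a query applied after a pipeline of operators."""
    sensitivity = query_sensitivity
    for name in operators:
        sensitivity *= OPERATOR_STABILITY[name]
    return sensitivity

def laplace_noise(scale, rng):
    """The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale)."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, operators, epsilon, rng):
    """Noisy count over a transformed table; a plain count has sensitivity 1."""
    scale = composed_sensitivity(operators, 1.0) / epsilon
    return true_count + laplace_noise(scale, rng)
```

For example, `private_count(10, ["filter", "duplicate_x2"], 0.5, random.Random(0))` adds noise with scale 2 / 0.5 = 4. An incorrect stability value would silently miscalibrate the noise, which is why verifying these components is valuable.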

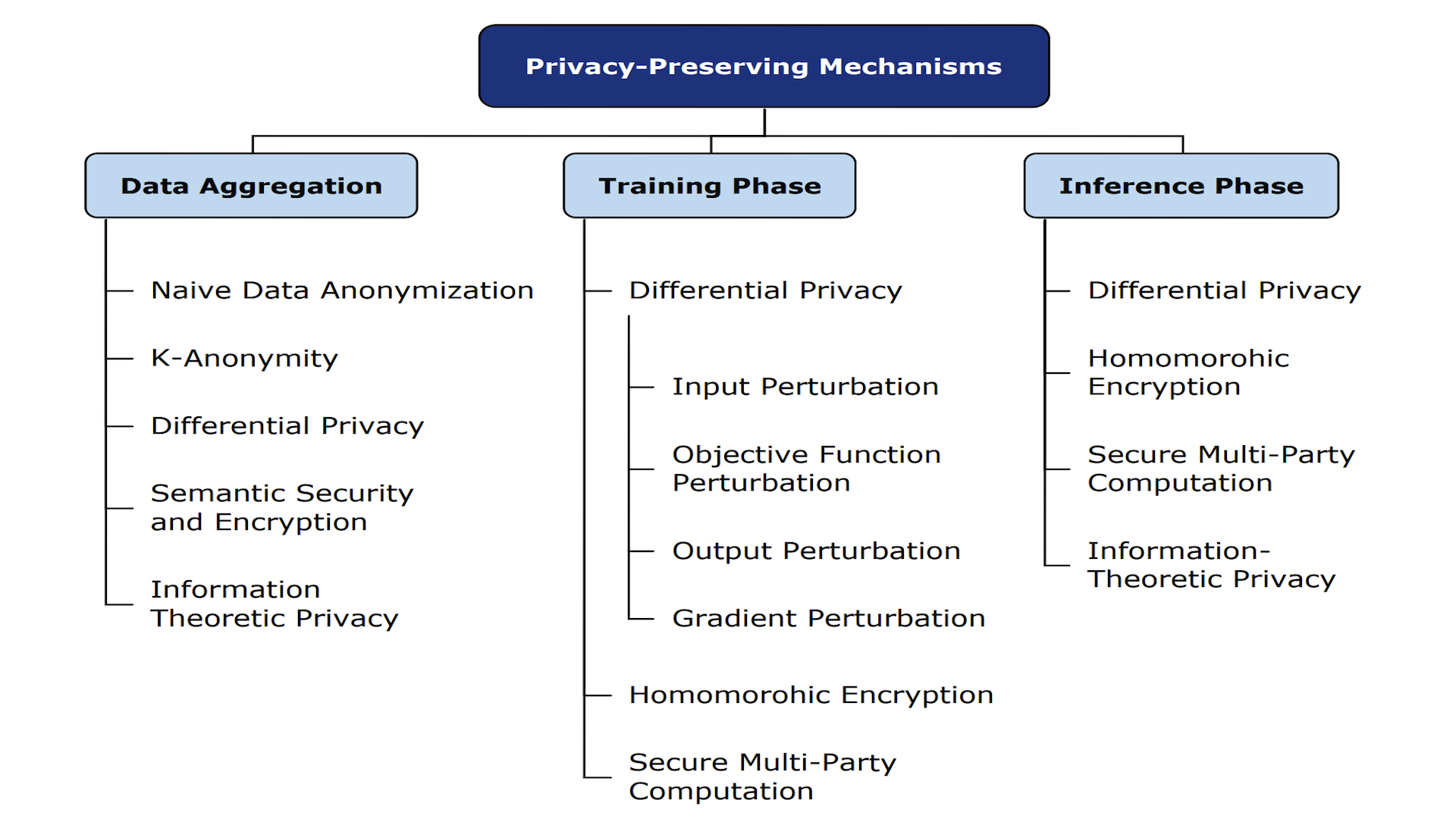

Private Machine Learning

Image from Privacy in Deep Learning: A Survey by Mireshghallah et al.

Objectives

- To understand the risks of privacy leakage in deep learning models and AR/VR systems

- To detect inductive biases in ML models and other decision making algorithms

- To devise strategies that defend against privacy leakage and promote algorithmic fairness

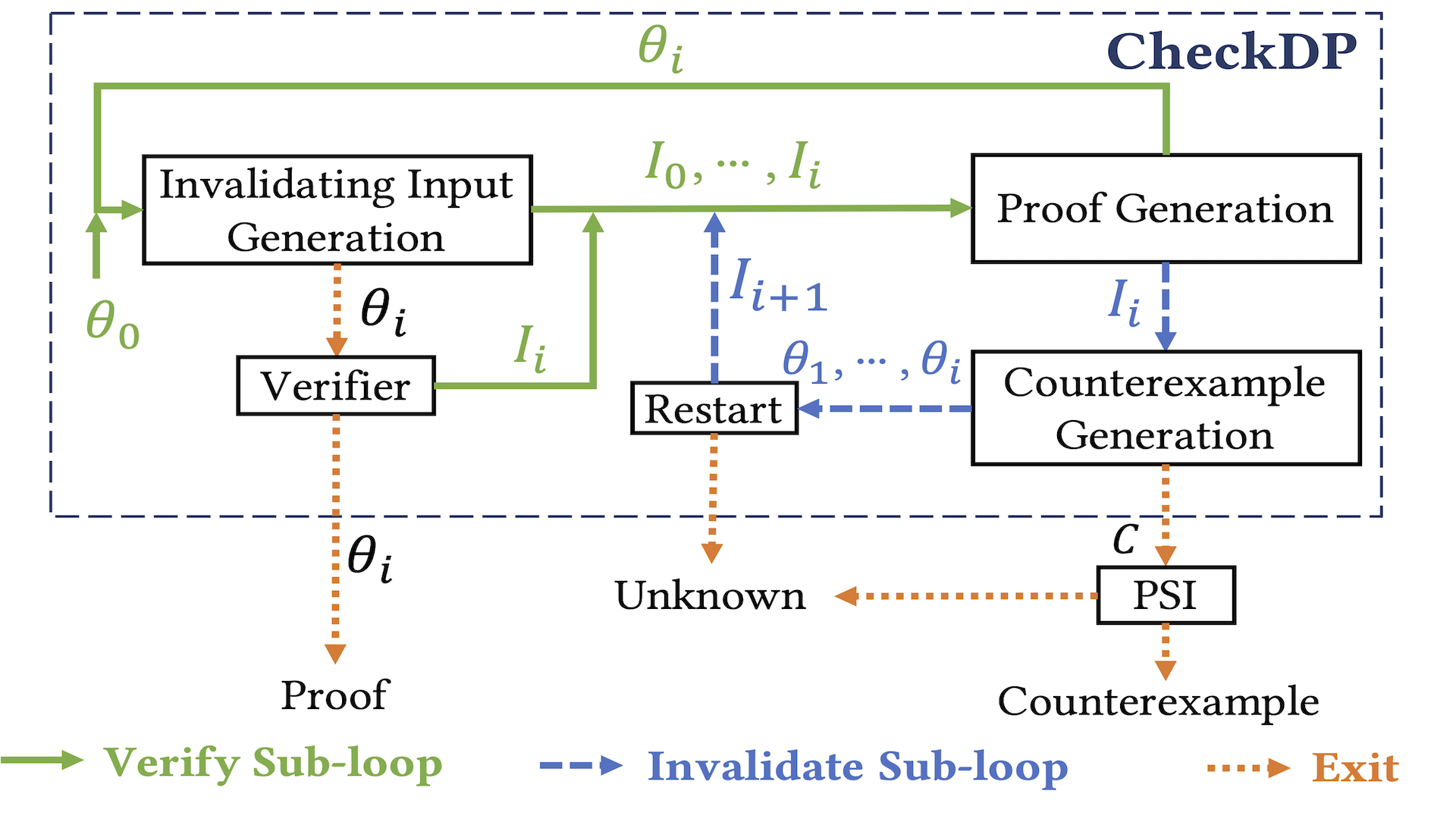

Verification and Synthesis of Differentially Private Programs

Image from our CheckDP paper

Objectives

- To demonstrate that buggy programs are incorrect by generating counterexamples (inputs that trigger the bugs)

- To generate machine-checkable proofs for correct programs using formal verification and programming language tools

- To synthesize a differentially private version of a given non-private program
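As a toy illustration of what a counterexample looks like, the sketch below checks a Laplace mechanism whose noise scale may be wrong. This is not the CheckDP algorithm. It is a hand-written check, under the assumption that the mechanism adds Laplace noise to a numeric query answer, and every name in it is hypothetical. It searches a few candidate outputs for one where the privacy loss between two adjacent query answers exceeds ε.

```python
import math

def laplace_log_density(x, mean, scale):
    """Log of the Laplace(mean, scale) density at x."""
    return -abs(x - mean) / scale - math.log(2.0 * scale)

def privacy_loss(output, answer_a, answer_b, scale):
    """Log-ratio of the output densities under two adjacent query answers."""
    return (laplace_log_density(output, answer_a, scale)
            - laplace_log_density(output, answer_b, scale))

def find_counterexample(scale, sensitivity, epsilon, tolerance=1e-9):
    """Return (answer_a, answer_b, output, loss) violating epsilon-DP, or None."""
    # Two query answers that adjacent databases could produce.
    answer_a, answer_b = 0.0, sensitivity
    # Candidate outputs on both sides of, and at, the two answers.
    for output in (answer_a - 1.0, answer_a, answer_b, answer_b + 1.0):
        loss = abs(privacy_loss(output, answer_a, answer_b, scale))
        if loss > epsilon + tolerance:
            return (answer_a, answer_b, output, loss)
    return None
```

With sensitivity 1 and ε = 0.5, the correct scale 1 / 0.5 = 2 yields no counterexample, while a buggy scale of 1 does: the two adjacent answers together with a concrete output witness a privacy loss above ε.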